University Project

8 Months

Google Home, Surveys, Interviews

Solo Project

Overview

Imagine having a helpful voice assistant in your home, like the ones you can talk to through smart speakers or smartphones. Voice assistants have become popular, and their market is expected to be worth $23.8 billion by 2026. But here's the thing:

Most people treat voice assistants like servants, just giving instructions instead of having a friendly chat. That's where empathy and social skills come in.

If these voice assistants could understand our emotions and respond in a friendlier way, it would mean:

Improved Interactions

More Potential Use Cases

More User Engagement

That's why this study is about creating an empathetic voice assistant called EmoRe (pronounced Eemoray), which would recognise our emotions through our speech and respond with empathy, making our conversations feel more natural and enjoyable.

My Role - HCI Researcher

As the sole person working on this project, I had the exciting task of handling everything from start to finish. I took charge of all the project requirements, which involved:

Conducting thorough research.

Designing voice interactions.

Implementing the designs.

Conducting usability tests.

Side Note: I also explored voice-based machine learning algorithms to understand the current technological capabilities in emotion recognition. However, that work is outside the scope of this case study.

What to expect?

In this case study, we dive into the exciting world of interaction design, usability evaluation, and insightful discussions with our participants. By the end of it all, you can look forward to:

An empathetic response mapping, where we define the emotional reactions EmoRe provides for specific triggers.

User feedback, both in numbers and personal experiences, about EmoRe's current empathetic abilities.

Valuable insights from users on the rise of empathetic voice assistants like EmoRe.

It's an exploration you would not want to miss!

The Process

Understanding Empathy & User Expectations

Designing Empathetic Conversations

Implementing the Design

What do Users Think?

Post-study Interviews

Understanding Empathy & User Expectations

To establish a strong foundation in empathetic interactions, I started by studying existing literature on empathy and emotional behaviours.

After reviewing the different perspectives on empathy, I realised that for a voice assistant to truly support its users, it should display empathy towards them. This meant that:

The voice assistant should strive to comprehend the users' emotions and respond in a caring way, fostering a sense of being understood.

While digging deeper into emotional behaviours, I came across Hippocrates’ four temperaments (or moods):

Sanguine

Optimistic, Energetic, and Upbeat

Choleric

Irritable, Impulsive, and Bold

Phlegmatic

Calm, Slow, and Deliberate

Melancholic

Moody, Withdrawn, and Pessimistic

When mapping emotional reactions based on empathy, it was crucial to understand different types of empathetic responses. Through my investigation, I identified two approaches:

Parallel Responses

Express emotions similar to what the user feels. Recommended for positive emotional triggers, aiming to resonate and connect with the user's positive state.

Reactive Responses

Focus on acknowledging and addressing the user's emotional state. Recommended for negative emotional triggers, aiming to ease the user's negative feelings and support them.

I also made another significant observation about emotional interaction through speech: to convey emotions effectively, both the verbal content and the tone of voice matter. This means that when designing a response:

We must carefully choose the words and pay attention to the emotional expression conveyed through the voice.

Lastly, research on user expectations highlighted an important finding:

Users do not expect machines, artificial intelligence, or voice assistants to display any form of negative emotions.

Applying these findings is crucial for EmoRe to engage with users effectively and establish a meaningful emotional connection. By focusing on positive and supportive responses, EmoRe can also align with user expectations and create a more pleasant, comforting interaction.

Designing Empathetic Conversations

With a new understanding of empathetic interactions and user expectations, I was eager to design the empathetic trigger-response mapping for EmoRe.

To begin, I needed to define the trigger emotions that would elicit empathetic responses from the voice assistant. For simplicity and relevance, I chose the following emotions, which recent investigations have identified as commonly expressed towards voice assistants:

Happiness

Anger

Sadness

Fear

Excitement

Drawing upon the insights gathered from the previous steps, I formulated the empathetic response mapping as follows:

EmoRe’s Response to Happy Users

EmoRe’s Response to Excited Users

EmoRe’s Response to Sad Users

EmoRe’s Response to Fearful Users

EmoRe’s Response to Angry Users
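The rule behind this mapping is to use parallel responses for positive triggers and reactive responses for negative ones. It can be sketched as a small lookup from trigger emotion to response strategy. This is a hypothetical illustration, not EmoRe's actual Dialogflow configuration: the names `Emotion`, `ResponseType` and `response_type_for` are invented for this example, and the grouping of happiness and excitement as positive triggers (and anger, sadness and fear as negative ones) is inferred from the descriptions above.

```python
from enum import Enum


class Emotion(Enum):
    """The five trigger emotions EmoRe responds to."""
    HAPPINESS = "happiness"
    EXCITEMENT = "excitement"
    ANGER = "anger"
    SADNESS = "sadness"
    FEAR = "fear"


class ResponseType(Enum):
    PARALLEL = "parallel"  # express an emotion similar to the user's
    REACTIVE = "reactive"  # acknowledge and address the user's emotion


# Assumption: happiness and excitement are the positive triggers;
# every other trigger emotion is treated as negative.
POSITIVE_TRIGGERS = {Emotion.HAPPINESS, Emotion.EXCITEMENT}


def response_type_for(emotion: Emotion) -> ResponseType:
    """Pick the empathetic response strategy for a detected trigger emotion."""
    if emotion in POSITIVE_TRIGGERS:
        return ResponseType.PARALLEL
    # Anger, sadness and fear fall through to a reactive response.
    return ResponseType.REACTIVE


if __name__ == "__main__":
    for emotion in Emotion:
        print(f"{emotion.value:>10} -> {response_type_for(emotion).value}")
```

Because users did not expect machines to display negative emotions, a reactive response in this scheme would still be voiced in a calm, supportive tone rather than mirroring the user's anger or fear.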

Implementing the Design

To test the practicality and effectiveness of the design, I brought it to life in a prototype (a proof of concept, or PoC) running on a Google Home device.

Using Dialogflow, I programmed Google Home to emulate EmoRe so that it could understand and respond to the different trigger emotions. I manipulated the voice assistant's pitch, speech rate, and volume to express the desired mood.

NOTE: Before finalising the implementation, I conducted a remote preliminary test with willing participants to fine-tune the pitch, speech rate, and volume manipulation. Their feedback helped me refine the design and ensure that EmoRe conveyed the intended emotions in its responses.
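One standard way to vary pitch, speech rate and volume in Google Assistant responses is the SSML `<prosody>` element. The case study does not state whether EmoRe used SSML or which values were settled on in the preliminary test, so the sketch below is an assumption. The preset names `parallel_upbeat` and `reactive_calm`, their values, and the helper `to_ssml` are hypothetical placeholders.

```python
from xml.sax.saxutils import escape

# Hypothetical prosody presets (not EmoRe's tuned values).
# pitch: relative change in semitones; rate: percentage of normal speed;
# volume: one of the SSML keyword levels.
PROSODY_PRESETS = {
    "parallel_upbeat": {"pitch": "+2st", "rate": "110%", "volume": "loud"},
    "reactive_calm": {"pitch": "-1st", "rate": "90%", "volume": "soft"},
}


def to_ssml(text: str, preset: str) -> str:
    """Wrap a response in <speak><prosody ...> using one of the presets."""
    p = PROSODY_PRESETS[preset]
    return (
        "<speak>"
        f'<prosody pitch="{p["pitch"]}" rate="{p["rate"]}" volume="{p["volume"]}">'
        f"{escape(text)}"  # escape &, < and > so the SSML stays well-formed
        "</prosody>"
        "</speak>"
    )


if __name__ == "__main__":
    print(to_ssml("That sounds wonderful!", "parallel_upbeat"))
    print(to_ssml("I'm sorry to hear that. Let's sort it out together.", "reactive_calm"))
```

Keeping the prosody values in named presets means a round of preliminary testing only has to adjust a few numbers, not every response.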

As part of the implementation process, I created five scenarios representing the different trigger emotions and how EmoRe should respond empathetically. These scenarios served as examples to showcase the desired empathetic interactions:

-

Scenario ID: S1

Scenario: Customer Service

User Emotion: Angry

Description: User speaking to an automated customer care service to resolve a problem.

Type: Task-Oriented

-

Scenario ID: S2

Scenario: Client Meeting

User Emotion: Fearful/Uneasy

Description: First time using a voice assistant to book a restaurant for an important client meeting.

Type: Task-Oriented

-

Scenario ID: S3

Scenario: Movie Ticket Booking

User Emotion: Excited

Description: Child/Teenager booking tickets to a long-awaited movie.

Type: Task-Oriented

-

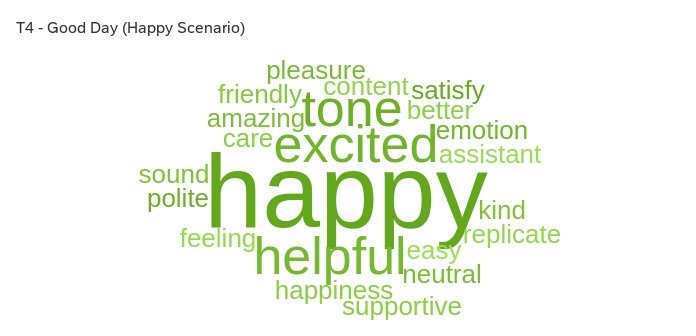

Scenario ID: S4

Scenario: A Good Day

User Emotion: Happy

Description: Employee coming home after a good day at work.

Type: Conversational

-

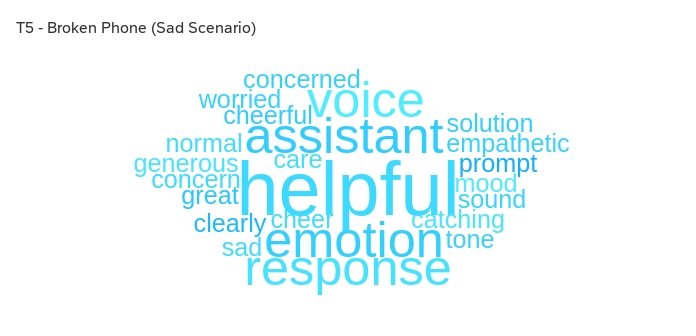

Scenario ID: S5

Scenario: Broken Phone

User Emotion: Sad

Description: The user just broke his/her phone and wants to get it repaired.

Type: Task-Oriented

By creating these scenarios, I aimed to demonstrate how EmoRe would respond empathetically to various trigger emotions, providing a realistic and engaging user experience.

What do Users Think?

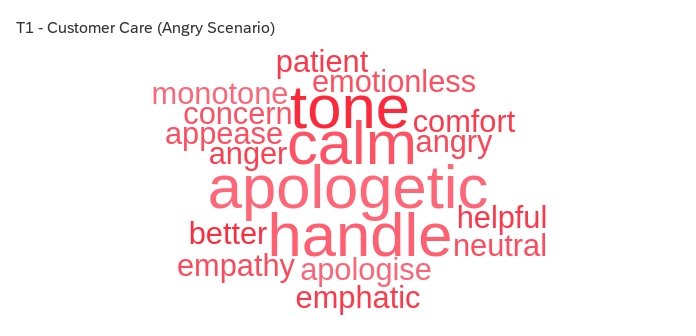

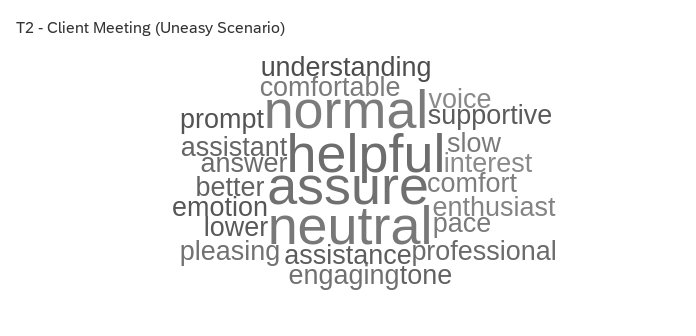

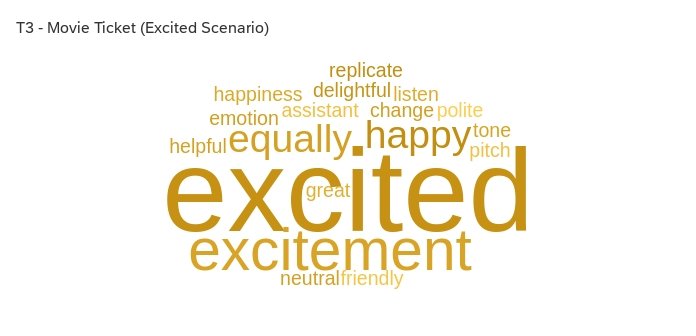

To evaluate EmoRe's empathetic abilities and gather insights, I conducted a user study with 14 participants. The study combined task-based observations, in which participants interacted with EmoRe in the scenarios described above, with surveys that collected quantitative feedback on EmoRe's empathetic responses.

I also gathered qualitative feedback to understand participants' perspectives on EmoRe's capabilities and on empathetic voice assistants in general. Through semi-structured interviews and open-ended questions, I encouraged participants to share their thoughts, experiences, and suggestions.

Study Design

-

Participants interacted with EmoRe in each scenario, and their responses were observed. After each scenario, participants were asked to describe the emotions they perceived in EmoRe's response. This showed how well EmoRe conveyed emotions and connected with users.

-

Participants were asked to rate EmoRe's empathetic abilities using a combination of standardised surveys that assessed perceived empathy.

-

In post-study interviews, participants had the opportunity to share their overall experience and perspectives regarding EmoRe and the concept of empathetic voice assistants. The interview questions focused on various topics, such as:

Users’ experience with the voice assistant

Users’ perception of the emotions expressed by EmoRe

Whether users considered EmoRe empathetic

Users’ beliefs on whether voice assistants should exhibit emotions

Users’ willingness to engage with an emotionally expressive voice assistant

Any feedback or suggestions users had

Laboratory Setup

User Feedback

The quantitative ratings were highly satisfactory. In the surveys, participants rated EmoRe's empathetic abilities with a mean score of:

43.43 out of 50 (approximately 87%)

This high score indicates that participants perceived EmoRe as empathetic.
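For reference, the percentage follows directly from the mean score and the maximum possible score of 50:

$$
\frac{43.43}{50} = 0.8686 \approx 87\%
$$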

Although some participants reported mixed or conflicting perceptions of EmoRe's emotions, specifically in response to the negative emotional triggers, overall EmoRe conveyed its emotions very successfully.

These findings highlight the effectiveness of the empathetic trigger-response mapping and the ability of EmoRe to evoke emotional responses in users.

However, the qualitative feedback yielded some valuable insights that dampened my morale and highlighted much-needed improvements to my design.

Post-study Interviews

Users had a satisfying experience interacting with the empathetic voice assistant and were attracted to its novelty and conversational nature. However, they also identified some potential issues.

Response to Negative Emotions

Handling negative emotions accurately and promptly is more important than handling positive ones.

Personalised responses based on personality and situation are needed, especially in scenarios involving sadness.

Need for Accurate Emotion Recognition and Well-defined Boundaries

Prevent inappropriate responses and avoid robot domination.

Create separate rules or laws for machines and limit the assistants' empathetic capabilities.

Privacy and Trust

Strong privacy policies, such as giving users control over data sharing, and implementing age restrictions or parental locks to protect vulnerable users, are essential.

Despite these concerns, empathetic voice assistants were seen as potentially beneficial. They could provide companionship for lonely individuals, assist in emotional intelligence training, and bridge the gap between machines and humans. However, there were worries about addiction, the loss of human connection, and the power of AI.

Final Thoughts

Reflections

Reflections on Empathetic Assistants

Prioritising negative emotion handling is more crucial than addressing positive emotions.

Empathetic assistants offer potential benefits, including companionship, emotional intelligence training, and enhanced interaction.

Establishing clear boundaries and limitations is necessary to prevent inappropriate responses and avoid robot domination.

Strong privacy policies and user control over data sharing are imperative.

Striking a balance between risks and potential benefits is essential.

Reflections on the Study

Design is an ongoing and evolving process.

User studies should not rely solely on quantitative results; qualitative insights hold greater value.

Next Steps

Address concerns and insights from the user study.

Account for a broader range of emotions, including secondary and tertiary emotions.

Consider the influence of mood on empathetic responses and explore mood thresholding techniques for more natural interaction.

Conclusion

As users embraced the positive aspects of empathetic voice assistants, they recognised the need to confront the challenges ahead. They emphasised the importance of responsible development and ethical use of these technologies. Their appreciation for the benefits of empathetic voice assistants was accompanied by a desire to address concerns and establish safeguards, ensuring that these assistants enhance human experiences without diminishing the value of genuine human connections.

Thank You

This project would not have been possible without the support of my supervisor, the university lab assistant, and all the participants who volunteered their time and energy to this project.
